originally published on Gamasutra

Although I am not a specialist in AI for video games, I want to highlight an important complement to AI: design properties of non-player characters (NPCs) informed by findings from cognitive psychology. With this article, I want to provide a simple framework for those interested in the psychological foundations of video game practice. In doing so I will advocate a sort of minimalism in the conception of animated characters. Minimalism is key for two main reasons. First, what matters most when trying to depict convincing figures is the realism of the behavior rather than the realism of the appearance. Second, the brain proceeds by reducing the complexity of visual scenes to a few meaningful chunks of information. I suggest AI developers could benefit from knowing exactly which elements of behavioral meaning the brain extracts from minimal visual scenes. With this knowledge, it is possible to exploit psychological resources to create lively and interesting characters in video games without requiring computationally taxing algorithms. Such an undertaking has already started with the advent of non-antagonistic NPCs, which I will call companions. They will serve as an illustration of the way movement patterns are exploited to give the illusion of life and intelligence.

What’s a companion for?

For a long time, NPCs were practice targets. Augmenting their intelligence was merely a means to increase the difficulty of shooting them. But during the last ten years or so, video games have introduced a different kind of NPC: companions. Companions have a different function than challenging your shooting skills: they thrive on your propensity to bond with what’s alive. Companions serve mainly two purposes. Like Virgil, who guided Dante through Hell and Purgatory, certain companions serve as a tour guide and personal tutor. Other companions are like Eurydice who, in Greek mythology, was waiting for Orpheus to rescue her from the Underworld. They hamper the player’s progress but lend considerable emotional weight to the quest. This is, for instance, what Ashley does in Resident Evil 4. This fragile, some might say annoying, creature is to be looked after. Although the presence of this character makes the player more vulnerable, it is also an efficient way to create dramatic tension. Elizabeth, in BioShock Infinite, is of the Virgil kind: she is mostly a narrative tool. She becomes almost invisible when action is at the forefront and reappears once peace has returned. Much like the dog in the Fable series, she explores the environment and may give the player some hints as to the location of useful items. The fact that the player never has to take care of her may lessen the strength of their relationship. But Elizabeth is a lively creature that embodies a unique perspective on the world. She is interesting just by the way she stands in front of you, as an autonomous being. Yorda, in Ico, is somewhere in between, both a supportive and a fragile presence that you have to protect.

What it takes to establish a solid bond

Game characters’ lifelike qualities are often discussed in terms of their resemblance to a human model, rather than in terms of the complexity of their behavior. As you may know, there is a puzzling effect in robotics, named the uncanny valley by the Japanese roboticist Masahiro Mori. When an android becomes too realistic, people tend to feel repelled, or even disgusted. The closer the resemblance to a human being, the more people tend to focus on the differences that separate the android from a real human. Mori also noticed the role of movement in the uncanny valley effect. An android may look weird to you because it is not able to reproduce the smoothness of natural movements. However realistic its skin may be, a robot still falls behind when trying to be graceful. Not being flesh and bone, a robot cannot take advantage of the stiffness and elasticity that a biological muscular system is endowed with. But you may be more inclined to excuse clunky behavior in a robot that does not pretend to mimic a human being. Think about a Roomba, for instance: its unpretentious aspect doesn’t preclude a certain evocativeness. OK, it’s a bland piece of plastic. OK, it may look awkward and limited. But one can still appreciate the way it moves, the autonomy it conveys, and perhaps even a sense of stubbornness as it relentlessly cleans your floor.

Going one step further in the direction of simplified appearance, you could imagine a spot moving on a screen. What would it take for this spot to look alive? This is a question animators want to answer, and cognitive psychologists too. Both want to know the basic patterns of movement that provoke an impression of aliveness. The spontaneous bond that we seem to form with everything that looks remotely alive does not depend so much on a familiar appearance. Rather, how evocative an animated object looks depends on its behavioral realism. And how realistic a behavior is depends on the movements that the brain recognizes as meaningful patterns of activity.

We are going to examine the crucial components of this notion of behavioral realism. Following three stages, which correspond to the questions one can ask when observing the behavior of an animated object, I will highlight the core properties of the process of attributing psychological traits to an object.

Is it alive?

The visual system is an exquisite mechanism. It draws various kinds of inferences about the world. It can tell you how far away an object is based on the difference between the images projected on your right and left eyes. It can complete the missing parts when an object is partially visible. It can tell you approximately how many peanuts there are in the jar, and how large the glass of beer is so you can hold it appropriately. It is also quite capable of dealing with the mechanical properties of a dynamic scene. If you see a collision between two objects, you can’t help but perceive a causal relationship between the object you identify as provoking the collision and the object that you interpret as receiving it. As pointed out by the Scottish philosopher David Hume, there is nothing in the sensory experience that can signal the causal relationship. Causation is a matter of the mind, which lumps together pieces of experience into a unified percept. We are also aware of certain constraints that shape the movement of objects. We know and expect that an object without support is going to fall down, that a moving object is eventually going to decelerate as a result of friction, or that a movement, if undisturbed, has to continue in a straight line. Apparent violations of these constraints (when, for instance, an object moves on its own or suddenly accelerates) constitute precious indications that some unobservable causes are influencing its movement. Numerous experiments in psychology show that when confronted with these types of motion cues, observers spontaneously adopt a different system of interpretation than the one they would use for inert objects. They tend to perceive the object as something that looks alive and that has a certain control over the way it moves.

It doesn’t take a familiar face or a fancy beast outfit to create life. Any simple geometrical figure will do, provided it moves the right way. More precisely, we can distinguish two components that characterize the process of attributing aliveness to a moving object:

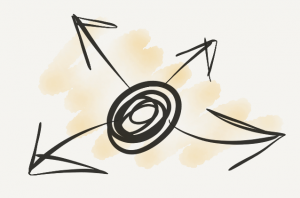

Spontaneity: this is the propensity to initiate a movement and spontaneously change speed or direction (even without interaction with an external object). The more spontaneous, the more lively an object is likely to appear.

Directedness: this is the consistency of a trajectory over a certain period of time. The more often an object changes speed or direction, the less controlled its behavior will look.

Spontaneity determines the overall liveliness of the movement. Directedness determines how much an object appears to be in control of its movement. A movement without spontaneity looks rigid, mechanical. A movement without directedness looks random, unconstrained. You need to adjust both parameters to make something look alive. The movement you impart to the object has to give the impression of being self-generated, yet controlled enough that it doesn’t appear purely random. This is because life is something that imposes dynamic and transient structures on the world. In essence, spontaneity represents the spark of life, and directedness the ephemeral organization that constitutes a gesture.
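
To make the two parameters concrete, here is a minimal Python sketch of a dot whose movement is governed by them. The names `simulate_dot`, `turn_probability` and `hold_steps` are my own illustrative assumptions, not taken from any game or experiment: `turn_probability` stands in for spontaneity (the chance per step of a self-initiated change of heading) and `hold_steps` stands in for directedness (how long a heading is kept before a change is allowed).

```python
import math
import random


def simulate_dot(steps, turn_probability, hold_steps, seed=0):
    """Move a dot at unit speed and return (path, number_of_turns).

    turn_probability: assumed proxy for spontaneity, in [0, 1].
    hold_steps: assumed proxy for directedness; minimum number of
        steps a heading is kept before the dot may change it.
    """
    rng = random.Random(seed)
    x = y = 0.0
    heading = rng.uniform(0.0, 2.0 * math.pi)
    held = 0          # steps spent on the current heading
    turns = 0
    path = [(x, y)]
    for _ in range(steps):
        # A self-initiated change of direction, with no external cause.
        if held >= hold_steps and rng.random() < turn_probability:
            heading = rng.uniform(0.0, 2.0 * math.pi)
            held = 0
            turns += 1
        x += math.cos(heading)
        y += math.sin(heading)
        held += 1
        path.append((x, y))
    return path, turns
```

With `turn_probability=0` the dot glides rigidly along a straight line. With `hold_steps=0` and a high `turn_probability` it jitters at random. A movement that holds its heading for a while and then changes it on its own sits in between, which is the region where, under these assumptions, the dot would be expected to look most alive.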

We can illustrate these notions with some examples from Super Mario. For instance, Koopas move in a straightforward fashion until they hit an obstacle and reverse their direction. Although they initiate their movement spontaneously, further changes in their trajectory are induced by the environment. They score low on both spontaneity and directedness, because their movement is almost entirely constrained by the ground on which they move. As a result their behavior is rather dull and predictable. Paratroopas have a more interesting behavior. Because they are not constrained by the ground, they do not move in such a straightforward way. Instead they oscillate slightly on an otherwise rectilinear trajectory. Bloopers are even more interesting. They score high on spontaneity as they often change direction. They score relatively high on directedness as they maintain a rectilinear trajectory for a few seconds before changing direction.

Is it an intentional being?

Animals are autonomous, in the sense that they do not rely on an external source of energy to move. But being autonomous doesn’t mean that an organism can produce a sophisticated representation of the world and adjust its behavior accordingly. Think about a fly buzzing around a room. It looks undoubtedly alive, but we may be reluctant to qualify its behavior as intentional. Most NPCs in video games are like the fly: they roam around until something catches their limited attention. Zombies, of course, are the beloved roamers of video games. They don’t look very bright; their behavior is not oriented enough for our naive psychology to consider them otherwise. But at least they’re doing something that looks intentional: they chase you. Chasing behavior is the basis of game AI. Psychologically speaking, this is a step toward making the player perceive intentional behavior.

Compared to the previous stage, this is where we start considering goal-oriented behaviors. Previously we only mentioned local cues from motion, such as spontaneous changes of direction. However, when designing NPCs with apparent goals or intentions, we need to consider their relationship with distant objects or events. Especially important is the reactivity of the character to events occurring in its surroundings. The perceived reactivity of a given NPC depends on the human ability to detect contingencies between objects and agents. A contingency is a non-accidental relation between one movement and another.

Contingency: this is the connection between events. We talk about mechanical contingencies when referring to physical connections involving physical forces, and of social contingencies when causation takes place at a distance, based on intentional properties rather than physical ones.

To illustrate this notion, let’s remember Ico’s Yorda, one of the most exquisite NPCs in the history of video games. This most graceful character successfully conveys a social presence by implementing certain contingencies. She turns her head in the direction of Ico or in the direction of an immediate danger. She steps back slightly when Ico comes too close to her. Note, however, that she possesses some measure of autonomy. Her behavior is not perfectly contingent on external cues. You will see her walk away on some occasions. This is a very important property if you want to convey the illusion of freedom and self-sufficiency in a companion.

An important aspect of contingency is temporal contiguity, i.e., the short delay between two events, for instance something that your avatar is doing and the reaction of an animated character. The human visual system is very sensitive to breaks in the temporal continuity of an action sequence. For instance, imagine a sequence involving two objects. One moves in the direction of the other until it makes contact. The second object starts to move as soon as the first has touched it. In such conditions, you can’t help feeling that the first object has given a push to the second object. But introduce a delay when the two objects collide and it will alter the causal impression. A temporal delay as short as 50 ms between the two movements suffices to break the causal illusion. You would see instead two disconnected movements. In terms of intentional behavior, temporal contiguity is going to determine the perceived reactivity of an animated character, and possibly its degree of intelligence.
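
As a rough illustration of how such a latency budget could be checked in a game loop, here is a small Python sketch. It assumes a fixed 60 frames per second and reuses the 50 ms figure quoted above as a design budget. That figure comes from the collision example, so applying it to an NPC's social reactions is my own assumption, and the function names are hypothetical.

```python
FRAME_MS = 1000.0 / 60.0   # assumed fixed frame time (60 fps)
CAUSAL_WINDOW_MS = 50.0    # delay quoted in the text; used here as a budget


def reaction_delay_ms(event_frame, reaction_frame, frame_ms=FRAME_MS):
    """Delay between a triggering event and the NPC's visible reaction."""
    return (reaction_frame - event_frame) * frame_ms


def reads_as_reaction(event_frame, reaction_frame,
                      window_ms=CAUSAL_WINDOW_MS, frame_ms=FRAME_MS):
    """True if the reaction follows the event closely enough to be
    perceived as caused by it, under the assumed window."""
    delay = reaction_delay_ms(event_frame, reaction_frame, frame_ms)
    return 0.0 <= delay < window_ms
```

Under these assumptions, a reaction that starts one or two frames after the event stays inside the window, while one that starts four frames later (about 67 ms) risks being seen as a separate, unrelated movement.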

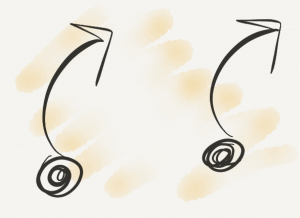

Contingency is but one aspect of goal-oriented behavior. Imagine a dot moving on your screen. The dot moves in a specific direction, until an invisible force, a gust of wind say, pushes it back in the opposite direction. Imagine that after a while, the dot resumes its movement in the direction of the same location, and is pushed back once again. Imagine that it does that several times. What would you conclude from this sequence of movements? Probably that the dot is stubborn, foolish, courageous, or blind to its inevitable fate. All those descriptions tap into a set of intuitive principles that psychologists sometimes call “naïve psychology”. Naïve psychology is about goals, motives, intentions; it is about the reasons for which someone does something. When observing the dot moving, you implicitly infer from the consistency of its trajectory that it ‘wants’ to reach a particular location. From the patterns of acceleration, deceleration and deviations from the main trajectory, you infer the constraints that it faces. And you can also infer certain personality traits that give another layer of explanation to the observed behavior.

Beyond the attribution of intentions and personality traits, you may also wonder why the dot wants to reach the corner of the screen. For humans, this is often a mandatory step in the way the attribution process naturally unfolds. It’s very hard to consider an intentional behavior without asking about the underlying motives of the action. This is exemplified by a pioneering experiment in the domain of psychological attribution, designed by Heider & Simmel in 1944. In this experiment, people watch a short film involving simple geometric figures (the film can be found online). Instead of simply describing the kinematic characteristics of the scene, people elaborate sophisticated scenarios. They say for instance that the small triangle is trying to rescue his girlfriend (the disc) from a bully (the big triangle). Such a narration is a concentrate of intentions and motivations, packed elegantly into a coherent string of events. For a human being it is the natural output of the perception of a sequence of movements that appear goal-directed and contingent upon each other.

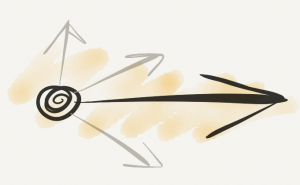

A narration is also a description of rational motives. A rationality principle states that an intentional agent adopts rational means to reach a goal. Seeing an object adjusting its behavior to external constraints indicates that it possesses the ability to select appropriate strategies to achieve a goal. For example, if an agent’s goal is to reach another object and there is no obstacle in its way, it would seem more reasonable for the agent to move in a straight line rather than to jump around. In the observer’s opinion, an object’s behavior appears all the more intentional if it conforms to this rationality principle.

Rationality: this is the property of adopting the most efficient means to reach a goal, given the specific constraints of a situation. A principle of rational action entails that an intentional action functions to realize goal-states by the most efficient means available.
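
One inexpensive way to produce movement that conforms to this principle is to let the agent follow a shortest path. The Python sketch below uses a plain breadth-first search on a small grid. The grid encoding (`#` for walls) and the function name `shortest_path` are assumptions made for illustration, and this is only one of many possible techniques, not a description of how any particular game does it.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid of strings.

    Cells containing '#' are walls. Returns the list of (row, col)
    cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

On an open grid the agent walks straight to its goal; when a wall is placed in the way, it takes the shortest detour and nothing more. An observer watching both cases would, according to the rationality principle, tend to read the change of route as an adjustment to the constraint.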

In terms of AI, a rational behavior is certainly more difficult to implement than a simple chasing behavior. But by taking into account principles of psychological attribution we can alleviate the computational requirements, by offloading critical inferences to the player’s brain. The intelligence doesn’t need to be in the algorithm. Rather, we expect the mind of the observer to fill in the gaps in the AI’s behavior. What’s needed is a proper implementation of contextual cues, for instance the cues necessary for an observer to interpret movements as goal-oriented actions. When Ashley is hiding in Resident Evil 4, her behavior looks rational. However, it is only a scripted behavior activated in the presence of enemies. Rationality is not implemented in the underlying algorithm. It is the nature of the situation in which the behavior is exhibited, and the player’s knowledge of what one is supposed to do in such a situation, that make it look that way.

Does it have mind-reading abilities?

Another layer of psychological attribution can be added when considering social behavior. Thomas was alone, but another fellow comes along. How is Thomas going to react? That depends on Thomas’ social skills and motivations. If Thomas was, let’s say, a spider, he wouldn’t show much interest in a companion, and would mind his own business (or would try to eat it!). But if Thomas has human social skills, another guy in the room is an altogether different matter. Another guy in the room is someone you can interact with, chat with, make plans with, quarrel with, fight with. Another guy in the room may be just like you, someone able to recognize someone else as an intentional being.

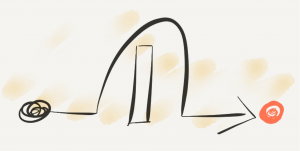

When considering social interactions, what we consider in fact is the capacity of an agent to act not only relative to its own goals but also relative to other agents’ goals. This is what ‘social’ means: a coordination of goals in a negotiated space. Imagine for instance a square and a triangle. The triangle tries to climb a slope, or at least that’s what you infer from its movement and the context in which the movement takes place. The square puts itself immediately behind the triangle and moves in the same direction. This gives the triangle the push it needs to reach the plateau. You would probably conclude from this scene that the square wants to help the triangle. If we decompose this into a sequence of cognitive operations: 1) you infer that the triangle wants to reach the plateau, 2) you infer that the square wants the triangle to reach the plateau, 3) you interpret the alignment of both goals in terms of a prosocial behavior, 4) you conclude that the square is friendly to the triangle. It turns out that even infants are sensitive to goal alignment. Several experiments have shown that infants as young as six months old are sensitive to the altruistic nature of an action. They tend to prefer the object that ‘helps’ the other object go up the slope, rather than the one that ‘hinders’ the other object from going up the slope by placing itself in front of it.

Goal alignment: this is the extent to which an agent’s goal corresponds to another agent’s goal. How friendly an agent looks will depend on the degree of goal alignment. An agent will be seen as helping another one if their respective goals are convergent; it will be seen as preventing another from doing something if their goals go in opposite ways; it will be seen as indifferent to another one if their goals are not aligned.
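
A crude way to put a number on this idea is to represent each agent's goal as a direction vector and compare the two. The Python sketch below does that with a cosine similarity. Treating goals as 2D directions, the threshold of 0.5, and the names `alignment` and `perceived_stance` are all simplifying assumptions of mine, not part of the experiments mentioned above.

```python
import math


def alignment(goal_a, goal_b):
    """Cosine of the angle between two goal-direction vectors, in [-1, 1]."""
    ax, ay = goal_a
    bx, by = goal_b
    norm = math.hypot(ax, ay) * math.hypot(bx, by)
    if norm == 0.0:
        return 0.0  # an agent with no goal direction is treated as neutral
    return (ax * bx + ay * by) / norm


def perceived_stance(goal_a, goal_b, threshold=0.5):
    """Classify how agent A is likely to be read relative to agent B."""
    score = alignment(goal_a, goal_b)
    if score >= threshold:
        return "helping"
    if score <= -threshold:
        return "hindering"
    return "indifferent"
```

In the slope scene, a square pushing in the same uphill direction as the triangle would come out as "helping", one pushing downhill as "hindering", and one moving sideways as "indifferent".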

Implementing genuine social behavior in an NPC is a challenging task. A game AI would need to evaluate and predict the player’s current goal to adjust the NPC’s behavior. It would need to integrate different metrics to produce a model of the player’s personality and playing style: for instance the player’s pace, his reliance on certain tactics, or the options chosen in previous similar circumstances. In short, the game AI would need to read the player’s mind, much as we humans attempt to do when interacting with each other. Of course there are some tricks developers can use to give the appearance of mind-reading. The game ‘knows’ the player’s goal when it is the only possible goal, for instance when the player needs to find an item to unlock a portal and this is the only thing he can do. At that point, an NPC can adjust its behavior to the player’s goal. This is what Yorda does when directing Ico’s attention toward important clues for the resolution of a puzzle. The pointing gesture is an example of goal alignment. It indicates the ability to consider another agent’s perspective and influence his behavior in a manner that is relevant to the goal at stake.
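
The "only possible goal" trick can be sketched in a few lines of Python. Everything here is hypothetical: the list of open objectives, the function names, and the fallback of simply following the player are assumptions for illustration, not how Ico or any other game implements it.

```python
def inferred_player_goal(open_objectives):
    """Return the player's goal only when it is unambiguous."""
    if len(open_objectives) == 1:
        return open_objectives[0]
    return None  # several (or no) options: the game cannot 'know'


def companion_action(open_objectives):
    """Pick a companion behavior that appears aligned with the player."""
    goal = inferred_player_goal(open_objectives)
    if goal is None:
        return "follow player"
    return f"point at {goal}"
```

For example, `companion_action(["portal key"])` yields a pointing gesture toward the key, while a situation with two open objectives falls back to the neutral behavior rather than guessing.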

Is that all?

I hope I have managed to convey the sense that there are certain rules to consider when designing an interesting companion. Those rules correspond to the process of psychological attribution. This is a process through which our brain recovers the structure of the social world: a world of goals, intentions, attempts, emotions, beliefs, and sentiments. Game designers tap intuitively into what the brain considers satisfying causal connections. But making these preferences explicit may offer opportunities to refine NPCs’ behavior at little cost. I have tried to give a brief sketch of this program, with the hope that it could help spark life in a domain that deserves to be scouted out. Note that I have barely scratched the surface and much more needs to be said. What about emotions and personality? How are they conveyed through motion alone? What about empathy? How does an empathic relationship develop over time? How does it relate to the process of psychological attribution? Surely there is still some land to be tilled to plant the seed of life.